What Is Pressure? A Beginner’s Guide for Instrumentation Students

For anyone embarking on a journey into the world of instrumentation and control, understanding pressure is as fundamental as scales are to a musician. Pressure is one of the most commonly measured variables in countless industrial processes, from the roaring furnaces of a steel mill to the sterile environments of a pharmaceutical laboratory. This comprehensive guide will walk you through the essential principles of pressure, its various forms, the units used to measure it, the instruments that sense it, and its critical applications in the real world.

Section 1: The Fundamental Concept of Pressure

At its core, pressure is a simple yet powerful concept. It is defined as the force exerted perpendicularly on a surface per unit of area. Imagine a book resting on a table. The book's weight exerts a force on the table's surface, and the pressure is that force divided by the area over which the book touches the table.

The mathematical representation of this relationship is expressed by the formula:

$$P = \frac{F}{A}$$

Where:

- P is the pressure

- F is the force applied

- A is the area over which the force is distributed

From this formula, we can deduce a crucial relationship: for a given force, the smaller the area of application, the greater the pressure. This is why a sharp knife cuts more effectively than a dull one – the force from your hand is concentrated onto a very small area, creating immense pressure.
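A quick numeric check of P = F / A makes the knife example concrete. This minimal Python sketch assumes a 50 N push and two made-up contact areas; the numbers are illustrative, not measured.

```python
def pressure_pa(force_n: float, area_m2: float) -> float:
    """P = F / A, with force in newtons and area in square metres (result in Pa)."""
    if area_m2 <= 0:
        raise ValueError("contact area must be positive")
    return force_n / area_m2

force = 50.0        # N, assumed hand force on the blade
dull_area = 1e-4    # m^2 (1 cm^2), assumed contact area of a dull edge
sharp_area = 1e-6   # m^2 (1 mm^2), assumed contact area of a sharp edge

print(pressure_pa(force, dull_area))   # about 5.0e5 Pa
print(pressure_pa(force, sharp_area))  # about 5.0e7 Pa, 100 times higher
```

The force is identical in both calls; shrinking the area by a factor of 100 raises the pressure by the same factor.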

Pressure is not limited to solids. It is a fundamental property of fluids – both liquids and gases. In a container filled with a fluid, the molecules are in constant, random motion, colliding with each other and the walls of the container. These countless collisions exert a force on the container walls, resulting in pressure.

Section 2: The Language of Pressure: Understanding the Units

The world of instrumentation uses a variety of units to quantify pressure, each with its own historical context and common applications. For an aspiring instrumentation professional, fluency in these units and their conversions is essential.

Here are some of the most common pressure units:

- Pascal (Pa): The SI (International System of Units) unit of pressure. One pascal is equal to a force of one newton applied over an area of one square meter (N/m²). Because the pascal is so small, kilopascals (kPa) and megapascals (MPa) are more commonly used in industrial settings.

- Pounds per Square Inch (psi): The standard unit of pressure in the United States customary system. It is widely used in industries like oil and gas, and for everyday applications like tire pressure.

- Bar: A metric unit of pressure, though not an SI unit. One bar is defined as exactly 100,000 Pa, which is slightly below standard atmospheric pressure at sea level. That closeness to one atmosphere makes it a convenient everyday unit.

- Atmosphere (atm): A unit based on the average atmospheric pressure at mean sea level and now defined as exactly 101,325 Pa, or approximately 14.7 psi.

- Torr: A unit of pressure named after Evangelista Torricelli, who is credited with inventing the mercury barometer. It is defined as 1/760 of a standard atmosphere (about 133.32 Pa). The torr is commonly used for measuring vacuum pressures.

- Inches of Water Column (inWC or "WC): A unit often used for measuring low pressures, such as in HVAC systems and cleanrooms. It represents the pressure exerted by a column of water one inch high.

- Millimeters of Mercury (mmHg): Similar to inches of water column, this unit represents the pressure exerted by a column of mercury one millimeter high. It is historically significant due to its use in mercury barometers and is still used in the medical field for blood pressure measurement.
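The two column units can be tied back to pascals with the hydrostatic relation P = ρgh. This sketch assumes standard gravity (9.80665 m/s²), water at about 1000 kg/m³ and mercury at 13,595.1 kg/m³ (0 °C); formal definitions of these units fix a specific reference temperature, so published values can differ slightly in the last digit.

```python
G = 9.80665  # standard gravity, m/s^2

def column_pressure_pa(density_kg_m3: float, height_m: float, g: float = G) -> float:
    """Hydrostatic pressure at the base of a fluid column: P = rho * g * h."""
    return density_kg_m3 * g * height_m

WATER = 1000.0      # kg/m^3, assumed (water near 4 °C)
MERCURY = 13_595.1  # kg/m^3, mercury at 0 °C

one_in_wc = column_pressure_pa(WATER, 0.0254)   # 1 inch = 0.0254 m
one_mm_hg = column_pressure_pa(MERCURY, 0.001)  # 1 mm = 0.001 m
print(f"1 inWC = {one_in_wc:.1f} Pa, 1 mmHg = {one_mm_hg:.1f} Pa")  # 249.1 Pa, 133.3 Pa
```

The dense mercury column explains why mmHg suits higher pressures such as blood pressure, while the light water column gives the fine resolution HVAC work needs.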

Pressure Unit Conversion Table:

| Unit | Pascal (Pa) | psi | bar | atm |
|---|---|---|---|---|
| 1 Pa | 1 | 0.000145 | 0.00001 | 0.00000987 |
| 1 psi | 6,894.76 | 1 | 0.0689476 | 0.068046 |
| 1 bar | 100,000 | 14.5038 | 1 | 0.986923 |
| 1 atm | 101,325 | 14.6959 | 1.01325 | 1 |
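Conversions like those in the table are easiest to do by passing through the pascal as a common base. A minimal sketch follows; the dictionary `PA_PER_UNIT` and the `convert` helper are hypothetical names, and torr is included using its definition of 101,325/760 Pa.

```python
# Pascals in one of each unit.
PA_PER_UNIT = {
    "Pa": 1.0,
    "kPa": 1_000.0,
    "MPa": 1_000_000.0,
    "bar": 100_000.0,
    "psi": 6_894.757293168,     # the table's 6,894.76, to more digits
    "atm": 101_325.0,
    "torr": 101_325.0 / 760.0,  # 1/760 of a standard atmosphere
}

def convert(value: float, from_unit: str, to_unit: str) -> float:
    """Convert a pressure value by going through pascals as the common base."""
    pascals = value * PA_PER_UNIT[from_unit]
    return pascals / PA_PER_UNIT[to_unit]

print(f"{convert(1.0, 'atm', 'psi'):.4f} psi")    # 14.6959 psi
print(f"{convert(2.5, 'bar', 'kPa'):.1f} kPa")    # 250.0 kPa
print(f"{convert(1.0, 'atm', 'torr'):.1f} torr")  # 760.0 torr
```

With one factor per unit, adding a new unit means adding one dictionary entry rather than a whole new row and column of factors.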

Section 3: The Four Flavors of Pressure: Absolute, Gauge, Differential, and Vacuum

When we measure pressure, the number on its own is not enough; we also need to know what it is measured against. Most readings are relative to a reference pressure, which leads to four important types of pressure measurement that every instrumentation student must understand.

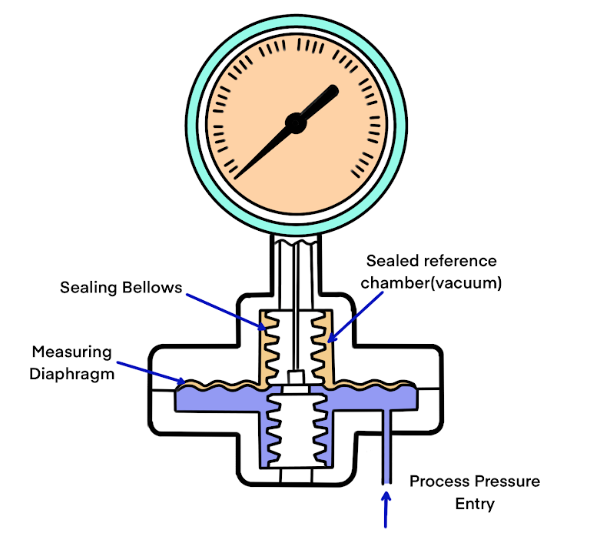

1. Absolute Pressure

Absolute pressure is measured relative to a perfect vacuum, a space completely devoid of matter. A perfect vacuum has an absolute pressure of zero, so an absolute pressure reading can never be negative.

Real-world example: Weather forecasting relies on absolute atmospheric pressure readings. A barometer measures the absolute pressure of the atmosphere.

Block Diagram of an Absolute Pressure Sensor:

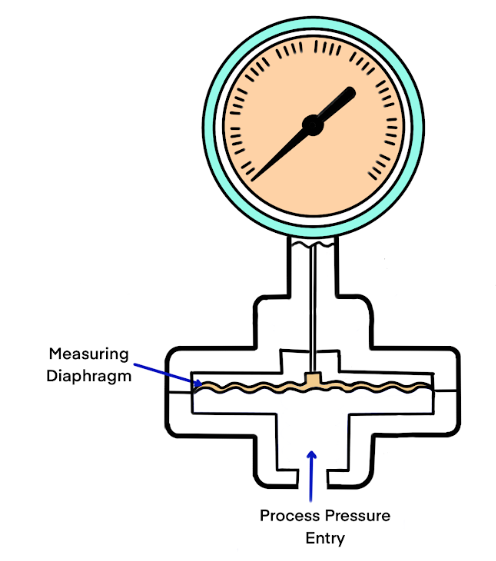

2. Gauge Pressure

Gauge pressure is the pressure measured relative to the local atmospheric pressure. This is the most common type of pressure measurement. A gauge pressure reading of zero indicates that the pressure is the same as the surrounding atmospheric pressure. Gauge pressure can be positive (higher than atmospheric pressure) or negative (lower than atmospheric pressure).

Real-world example: The pressure you measure in your car tires is gauge pressure. A reading of 32 psi means the pressure inside the tire is 32 psi above the local atmospheric pressure.
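The tire example reduces to a simple rule: absolute pressure equals gauge pressure plus local atmospheric pressure. This sketch assumes a standard sea-level atmosphere of 14.696 psi by default; the 12.2 psi value for a high-altitude location is an illustrative assumption.

```python
STANDARD_ATM_PSI = 14.696  # sea-level standard; local value varies with weather and altitude

def gauge_to_absolute(p_gauge: float, p_atm: float = STANDARD_ATM_PSI) -> float:
    """Absolute pressure = gauge pressure + local atmospheric pressure."""
    return p_gauge + p_atm

def absolute_to_gauge(p_abs: float, p_atm: float = STANDARD_ATM_PSI) -> float:
    """Gauge pressure = absolute pressure - local atmospheric pressure."""
    return p_abs - p_atm

tire_gauge = 32.0  # psi gauge, as read by a tire gauge
print(f"{gauge_to_absolute(tire_gauge):.1f} psia at sea level")        # 46.7 psia
print(f"{gauge_to_absolute(tire_gauge, 12.2):.1f} psia at altitude")   # 44.2 psia
```

The same 32 psi gauge reading corresponds to different absolute pressures depending on where the tire is, which is why it matters which reference a transmitter uses.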

Block Diagram of a Gauge Pressure Sensor:

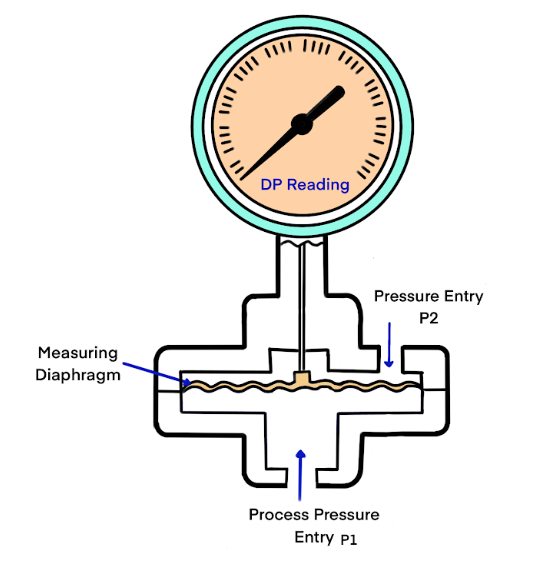

3. Differential Pressure

Differential pressure is simply the difference in pressure between two separate points. This measurement is crucial for determining flow rates, fluid levels, and filter blockages.

Real-world example: An orifice plate flowmeter works by creating a pressure drop in a pipe. A differential pressure transmitter measures the pressure before and after the orifice plate, and this difference is used to calculate the flow rate of the fluid.
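A differential pressure transmitter can stand in for a flowmeter because flow through an orifice rises with the square root of the pressure drop. This sketch uses the simplified incompressible-flow orifice equation; the discharge coefficient of 0.61 and the pipe and bore sizes are assumed typical values, and a real installation would take its coefficients from a standard such as ISO 5167.

```python
import math

def orifice_flow_m3_s(dp_pa: float, rho_kg_m3: float, bore_m: float, pipe_m: float,
                      cd: float = 0.61) -> float:
    """Volumetric flow (m^3/s) of an incompressible fluid through an orifice plate.

    Simplified form: Q = Cd / sqrt(1 - beta**4) * A_bore * sqrt(2 * dP / rho),
    where beta = bore diameter / pipe diameter. cd=0.61 is an assumed typical value.
    """
    if dp_pa < 0:
        raise ValueError("differential pressure must be non-negative")
    beta = bore_m / pipe_m
    bore_area = math.pi * bore_m ** 2 / 4.0
    return cd / math.sqrt(1.0 - beta ** 4) * bore_area * math.sqrt(2.0 * dp_pa / rho_kg_m3)

# Water (assumed 1000 kg/m^3) through a hypothetical 50 mm bore in a 100 mm pipe.
q1 = orifice_flow_m3_s(25_000.0, 1000.0, 0.05, 0.1)
q2 = orifice_flow_m3_s(100_000.0, 1000.0, 0.05, 0.1)
print(f"{q1 * 3600:.1f} m^3/h at 25 kPa, {q2 * 3600:.1f} m^3/h at 100 kPa")
print(q2 / q1)  # about 2.0: four times the pressure drop only doubles the flow
```

The square-root relationship is why DP flow loops need square-root extraction somewhere between the transmitter and the flow display.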

Block Diagram of a Differential Pressure Sensor:

4. Vacuum Pressure

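Vacuum pressure is any pressure lower than the local atmospheric pressure. It is essentially a negative gauge pressure. In practice, vacuum is reported either as an absolute pressure (common when using torr) or as a positive "inches of mercury vacuum" reading that states how far the pressure is below atmospheric.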

Real-world example: Vacuum packaging machines remove air from a package before sealing it to preserve food. The level of vacuum inside the package is a critical parameter.
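Vacuum, gauge and absolute readings describe the same pressure from different references. A minimal sketch, assuming a standard atmosphere of 760 torr and a hypothetical chamber pressure of 10 torr absolute:

```python
ATM_TORR = 760.0  # standard atmosphere in torr; actual local pressure varies

def vacuum_reading(p_abs_torr: float, p_atm_torr: float = ATM_TORR) -> float:
    """Depth of vacuum: how far the pressure sits below atmospheric (a positive number)."""
    return p_atm_torr - p_abs_torr

def gauge_from_absolute(p_abs_torr: float, p_atm_torr: float = ATM_TORR) -> float:
    """Equivalent gauge pressure, which is negative for any vacuum."""
    return p_abs_torr - p_atm_torr

chamber_abs = 10.0  # torr absolute, hypothetical sealing-chamber pressure
print(vacuum_reading(chamber_abs))       # 750.0
print(gauge_from_absolute(chamber_abs))  # -750.0
```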

Section 4: The Heart of Measurement: Pressure Sensors and Transmitters

At the heart of any pressure measurement system lies a sensor, a device that converts pressure into a measurable electrical signal. A transmitter then usually conditions this signal and sends it on to a control system or display. Let's explore some of the most common pressure sensing technologies.

1. Strain Gauge Sensors

Strain gauge sensors are one of the most widely used types of pressure sensors. They operate on the principle that the electrical resistance of a material changes when it is stretched or compressed.

How it works: A flexible diaphragm is exposed to the process pressure. As the pressure changes, the diaphragm deflects, causing a strain gauge bonded to it to stretch or compress. This change in the strain gauge’s resistance is measured by a Wheatstone bridge circuit, which produces an output voltage proportional to the pressure.
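The bridge arithmetic is easy to sketch. This example assumes a quarter bridge (one active gauge and three fixed resistors of equal value), a 350 Ω gauge with a gauge factor of 2.0, 10 V excitation and 500 microstrain; these are typical but hypothetical values, and the mapping from pressure to diaphragm strain is not modeled.

```python
def gauge_resistance(r_nominal: float, gauge_factor: float, strain: float) -> float:
    """Strain gauge resistance: R = R0 * (1 + GF * strain), strain in m/m."""
    return r_nominal * (1.0 + gauge_factor * strain)

def quarter_bridge_output(v_excitation: float, r_nominal: float, r_gauge: float) -> float:
    """Output voltage of a Wheatstone bridge with one active gauge.

    The gauge and one fixed resistor form one voltage divider; the other two fixed
    resistors form a reference divider sitting at exactly half the excitation.
    The bridge output is the difference between the two midpoint voltages.
    """
    v_active = v_excitation * r_gauge / (r_gauge + r_nominal)
    v_reference = v_excitation / 2.0
    return v_active - v_reference

r_g = gauge_resistance(350.0, 2.0, 500e-6)  # 500 microstrain from diaphragm deflection
v_out = quarter_bridge_output(10.0, 350.0, r_g)
print(f"{v_out * 1000:.3f} mV")             # 2.499 mV
```

For small strains the output is close to V_ex × GF × ε / 4, which is why the bridge output is usually treated as proportional to pressure. The signal is only a few millivolts, which is also why amplification is needed downstream.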

Block Diagram of a Strain Gauge Pressure Sensor:

2. Capacitive Sensors

Capacitive pressure sensors are known for their high accuracy and stability. They measure pressure by detecting changes in capacitance.

How it works: A capacitive sensor consists of two parallel conductive plates separated by a dielectric material. One of the plates is a flexible diaphragm that moves in response to pressure changes. This movement alters the distance between the plates, which in turn changes the capacitance of the sensor. An electronic circuit measures this change in capacitance and converts it into a pressure reading.
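The parallel-plate relation C = ε₀εᵣA/d shows why a moving diaphragm changes the capacitance. The sketch below assumes an ideal air-gap capacitor (εᵣ = 1, no fringing) with a hypothetical 1 cm² plate and a 20 µm gap at zero pressure.

```python
EPSILON_0 = 8.8541878128e-12  # F/m, vacuum permittivity

def plate_capacitance(area_m2: float, gap_m: float, eps_r: float = 1.0) -> float:
    """Ideal parallel-plate capacitance C = eps0 * eps_r * A / d (fringing ignored)."""
    return EPSILON_0 * eps_r * area_m2 / gap_m

area = 1e-4       # m^2 (1 cm^2), hypothetical plate area
rest_gap = 20e-6  # m (20 µm), hypothetical gap at zero pressure
for deflection_um in (0.0, 2.0, 5.0):
    gap = rest_gap - deflection_um * 1e-6  # pressure pushes the diaphragm closer
    print(f"deflection {deflection_um:.0f} µm -> C = {plate_capacitance(area, gap) * 1e12:.2f} pF")
```

Because C varies as 1/d, the raw response is not linear in deflection, which is one reason the electronics apply linearization.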

Block Diagram of a Capacitive Pressure Sensor:

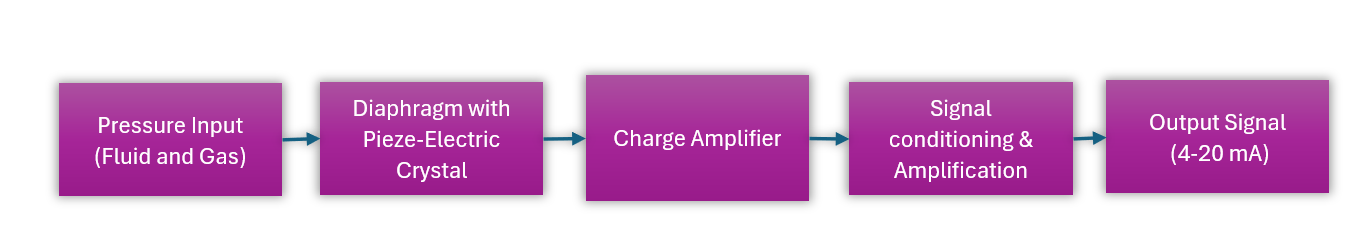

3. Piezoelectric Sensors

Piezoelectric sensors are ideal for measuring dynamic or rapidly changing pressures. They utilize the piezoelectric effect, where certain crystalline materials generate an electrical charge in response to mechanical stress.

How it works: A piezoelectric crystal is placed in contact with a diaphragm that is exposed to the process pressure. When the pressure fluctuates, it applies a force to the crystal, causing it to generate a proportional electrical charge. This charge is then amplified and converted into a voltage signal. Piezoelectric sensors are not suitable for measuring static (unchanging) pressures because the generated charge gradually leaks away, so the output decays toward zero even while the pressure is held constant.
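The loss of a static reading can be pictured with a simple first-order model in which the signal from a pressure step decays exponentially with a time constant τ. Both the model and the 2 s time constant are illustrative assumptions; real time constants depend on the crystal and the charge amplifier.

```python
import math

def piezo_step_response(pressure_step: float, sensitivity: float,
                        tau_s: float, t_s: float) -> float:
    """Idealized output t_s seconds after a pressure step that is then held constant.

    Assumed first-order model: output = sensitivity * step * exp(-t / tau).
    """
    return sensitivity * pressure_step * math.exp(-t_s / tau_s)

tau = 2.0  # s, hypothetical discharge time constant
for t in (0.0, 1.0, 2.0, 5.0, 10.0):
    # The applied pressure is still 100 units at every t, yet the output keeps falling.
    print(f"t = {t:4.1f} s  output = {piezo_step_response(100.0, 1.0, tau, t):6.2f}")
```

Events much shorter than τ are captured faithfully, which is why these sensors excel at dynamic measurements such as combustion or blast pressures.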

Block Diagram of a Piezoelectric Pressure Sensor:

From Sensor to System: The Role of Pressure Transmitters

A pressure sensor is the primary sensing element. However, in an industrial setting, the raw electrical signal from the sensor is often weak and susceptible to noise. This is where a pressure transmitter comes in.

A pressure transmitter takes the low-level signal from the sensor and performs several crucial functions:

- Amplification: It boosts the weak sensor signal to a more robust level.

- Linearization: It corrects for any non-linearities in the sensor’s output.

- Temperature Compensation: It mitigates the effects of temperature variations on the sensor’s accuracy.

- Signal Conversion: It converts the signal into a standard industrial format, such as a 4-20 mA current loop or a digital protocol (e.g., HART, which superimposes digital data on the 4-20 mA loop, or Foundation Fieldbus). This standardized signal can be reliably transmitted over long distances to a control system or display.
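The 4-20 mA conversion is a straight-line mapping between the transmitter's lower and upper range values (LRV and URV). A minimal sketch, assuming a hypothetical 0-10 bar range and ignoring the saturation and fault-current limits that real transmitters apply:

```python
def pressure_to_ma(pressure: float, lrv: float, urv: float) -> float:
    """Map a pressure in [lrv, urv] linearly onto 4-20 mA (no clamping)."""
    fraction = (pressure - lrv) / (urv - lrv)
    return 4.0 + 16.0 * fraction

def ma_to_pressure(current_ma: float, lrv: float, urv: float) -> float:
    """Inverse mapping, as done by the receiving control system."""
    fraction = (current_ma - 4.0) / 16.0
    return lrv + fraction * (urv - lrv)

LRV, URV = 0.0, 10.0  # bar, hypothetical calibrated range
print(pressure_to_ma(2.5, LRV, URV))   # 8.0
print(ma_to_pressure(12.0, LRV, URV))  # 5.0
```

Because the range starts at 4 mA rather than 0 mA, a reading of 0 mA can be recognized as a broken loop instead of a valid zero pressure.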

Section 5: Putting Pressure to Work: Industrial Applications

The ability to accurately measure and control pressure is fundamental to the safety, efficiency, and quality of countless industrial processes. Here are a few examples:

- Process Control: In chemical plants and refineries, pressure is a critical parameter for controlling reactions, distillation columns, and other processes. Maintaining the correct pressure ensures optimal product yield and prevents hazardous situations.

- Flow Measurement: As mentioned earlier, differential pressure transmitters are widely used to measure the flow rate of liquids and gases in pipes.

- Level Measurement: For a liquid of constant density in an open tank, the gauge pressure at the bottom is directly proportional to the height of liquid above it (P = ρgh). This principle is used for measuring the level of liquids in both open and closed tanks. In a pressurized vessel, a differential pressure transmitter is used, with its low-pressure side connected to the space above the liquid so that the vessel pressure cancels out.

- HVAC Systems: Pressure sensors are used to monitor and control air and refrigerant pressures in heating, ventilation, and air conditioning systems to ensure occupant comfort and energy efficiency.

- Aerospace: In aircraft, pressure sensors are critical for monitoring cabin pressure, hydraulic systems, and engine performance. Altimeters in aircraft are essentially sophisticated absolute pressure sensors.

- Biomedical: Pressure sensors are used in a wide range of medical devices, including blood pressure monitors, ventilators, and infusion pumps.
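Two of these applications reduce to short formulas. For level, h = P / (ρg); for a barometric altimeter, the International Standard Atmosphere gives pressure altitude in the lower atmosphere as h = 44,330.8 × (1 - (p/p₀)^0.190263) metres. This sketch assumes water at 1000 kg/m³, a dry-leg DP installation, and the standard sea-level reference p₀ = 101,325 Pa; real altimeters let the pilot adjust the reference to local conditions.

```python
G = 9.80665  # standard gravity, m/s^2

def level_open_tank_m(p_bottom_gauge_pa: float, density_kg_m3: float) -> float:
    """Open tank: h = P / (rho * g), using gauge pressure at the tank bottom."""
    return p_bottom_gauge_pa / (density_kg_m3 * G)

def level_closed_tank_m(p_bottom_pa: float, p_top_pa: float, density_kg_m3: float) -> float:
    """Pressurized tank: a DP transmitter (high side at the bottom, low side in the
    vapor space, dry leg assumed) cancels the gas pressure above the liquid."""
    return (p_bottom_pa - p_top_pa) / (density_kg_m3 * G)

def pressure_altitude_m(p_static_pa: float, p_ref_pa: float = 101_325.0) -> float:
    """ISA troposphere pressure altitude, valid below about 11 km."""
    return 44_330.8 * (1.0 - (p_static_pa / p_ref_pa) ** 0.190263)

print(round(level_open_tank_m(29_420.0, 1000.0), 2))                # 3.0
print(round(level_closed_tank_m(229_420.0, 200_000.0, 1000.0), 2))  # 3.0
print(round(pressure_altitude_m(89_874.6)))                         # approximately 1000 m
```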

Section 6: The Importance of Accuracy: An Introduction to Pressure Calibration

In the world of instrumentation, an inaccurate measurement can be worse than no measurement at all. It can lead to poor product quality, process inefficiencies, and even catastrophic failures. This is why the calibration of pressure instruments is of paramount importance.

What is Calibration?

Calibration is the process of comparing the readings of an instrument under test against a known standard of higher accuracy. The purpose of calibration is to determine the accuracy of the instrument and to make adjustments if it is found to be out of tolerance.

Why is Calibration Necessary?

Over time, the performance of pressure instruments can drift due to factors like mechanical wear, temperature changes, and exposure to harsh process conditions. Regular calibration ensures that the instrument continues to provide accurate and reliable measurements.

The Calibration Process in a Nutshell:

- Isolate the Instrument: The pressure instrument is safely disconnected from the process.

- Connect to a Calibrator: The instrument is connected to a pressure calibrator, which can generate a precise and known pressure. A high-accuracy reference standard is also connected to measure the applied pressure accurately.

- Perform a Multi-Point Check: The calibrator applies pressure at several points across the instrument’s range (e.g., 0%, 25%, 50%, 75%, and 100%). At each point, the reading of the instrument under test is compared to the reference standard.

- As-Found and As-Left Data: The initial readings are recorded as “as-found” data. If the instrument is found to be out of tolerance, adjustments are made (zero and span adjustments), and the calibration check is repeated. The final readings are recorded as “as-left” data.

- Documentation: A calibration certificate is generated, documenting the results, the standards used, and the date of calibration.
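The as-found comparison in the multi-point check comes down to expressing each error as a percentage of span and comparing it with a tolerance. The sketch below uses made-up readings for a hypothetical 0-10 bar transmitter with an assumed tolerance of ±0.5 % of span; `calibration_check` is an illustrative helper, not a standard function.

```python
def calibration_check(points, lrv: float, urv: float, tolerance_pct_span: float):
    """points: list of (reference_pressure, instrument_reading) pairs.

    Returns (reference, error in % of span, within tolerance?) for each point.
    """
    span = urv - lrv
    results = []
    for reference, reading in points:
        error_pct = (reading - reference) / span * 100.0
        results.append((reference, error_pct, abs(error_pct) <= tolerance_pct_span))
    return results

# Hypothetical as-found data at 0 %, 25 %, 50 %, 75 % and 100 % of range.
as_found = [(0.0, 0.02), (2.5, 2.54), (5.0, 5.07), (7.5, 7.58), (10.0, 10.09)]
results = calibration_check(as_found, 0.0, 10.0, 0.5)
for reference, error_pct, ok in results:
    print(f"{reference:5.1f} bar  error {error_pct:+.2f} % of span  {'PASS' if ok else 'FAIL'}")

needs_adjustment = not all(ok for _, _, ok in results)
print("Adjust zero/span and re-check" if needs_adjustment else "Within tolerance")
```

In this invented data set the error grows with applied pressure, the usual signature of a span error, with only a small zero offset at 0 bar.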

Conclusion: Your Foundation in Pressure Measurement

For the instrumentation student, a solid grasp of pressure is a non-negotiable prerequisite for a successful career. From the fundamental physics of force and area to the sophisticated technologies of modern pressure transmitters, this guide has provided a comprehensive overview of this vital process variable.

As you continue your studies and move into practical applications, you will build upon this foundational knowledge. You will learn about more advanced sensor technologies, complex control strategies, and the intricacies of working with pressure in hazardous environments. But the core principles you’ve learned here will remain your constant companions, enabling you to confidently navigate the dynamic and rewarding field of instrumentation.