The Critical Role of Human Factors in Functional Safety Design

In the intricate world of industrial automation and safety-critical systems, we place immense faith in the reliability of technology. We engineer sophisticated safety instrumented systems (SIS), write countless lines of code, and perform rigorous testing to prevent catastrophic failures. Yet, a ghost in the machine persistently undermines our best efforts: the human element. From the control room operator to the maintenance technician, human actions, decisions, and even inactions are a pivotal and often underestimated factor in the functional safety of any system. This blog post delves into the critical role of human factors in functional safety design, exploring why a human-centric approach is not just beneficial, but absolutely essential for achieving true safety.

The Illusion of Purely Technical Safety

For decades, the primary focus of functional safety has been on the technical aspects of safety systems. Standards like IEC 61508, the foundational standard for functional safety, and its process industry derivative, IEC 61511, provide a robust framework for managing the lifecycle of safety systems. They address hardware reliability, software integrity, and systematic capability, all aimed at ensuring a system will perform its required safety function when a demand occurs.

However, a stark reality emerges from the annals of industrial accidents. The Chernobyl disaster, the Bhopal gas tragedy, and the Tokaimura criticality accident all share a common, tragic thread: human error was a significant contributing factor. These were not simply failures of technology, but failures of the human-machine interface, of procedures, of training, and of organizational culture. They serve as grim reminders that a safety system is only as effective as the people who design, operate, and maintain it.

This is where the discipline of human factors, also known as ergonomics, comes into play. Human factors is the scientific discipline concerned with the understanding of interactions among humans and other elements of a system, and the profession that applies theory, principles, data, and methods to design in order to optimize human well-being and overall system performance. In the context of functional safety, it’s about designing systems, tasks, and environments that are compatible with human capabilities and limitations.

The Anatomy of Human Error: More Than Just a “Mistake”

To effectively integrate human factors into functional safety design, we must first understand the nature of human error. It's not a monolithic concept. Dr. James Reason, a pioneer in the field, distinguished several types of human failure, which are commonly grouped into three main categories:

Slips and Lapses: These are errors in execution, where the intention is correct but the action is not carried out as planned. A slip is an action performed incorrectly: a classic example is an operator intending to close valve A but inadvertently closing the adjacent valve B. A lapse is a memory failure, such as forgetting a step in a procedure. Both are often caused by distractions, fatigue, or a poorly designed user interface.

Mistakes: These are errors in intention, where the chosen plan is inadequate to achieve the desired outcome. A mistake might involve an engineer misinterpreting a design specification or an operator incorrectly diagnosing a fault condition based on the available information. These often stem from insufficient knowledge, inadequate training, or flawed procedures.

Violations: These are deliberate deviations from established rules and procedures. While some violations can be malicious, most are routine or optimizing, where individuals believe the prescribed way of working is inefficient or unnecessary. This often points to a disconnect between the procedures as written and the practical realities of the work.

Recognizing these different types of errors is crucial. A slip might be addressed through better HMI design, while a mistake might require improved training and clearer procedures. Violations often signal deeper issues with the safety culture and the practicality of the established rules.
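The distinctions above can be expressed as a simplified decision flow. The sketch below is an illustration only, assuming three yes/no questions; the `classify` function and the remedy strings in `COUNTERMEASURES` are hypothetical, and a real incident analysis needs much more context than three booleans.

```python
from enum import Enum


class FailureType(Enum):
    SLIP = "slip"
    LAPSE = "lapse"
    MISTAKE = "mistake"
    VIOLATION = "violation"


# Hypothetical first-line remedies, following the mapping described above.
COUNTERMEASURES = {
    FailureType.SLIP: "improve HMI design (layout, labelling, feedback)",
    FailureType.LAPSE: "add checklists and prompts for easily omitted steps",
    FailureType.MISTAKE: "improve training and clarify procedures",
    FailureType.VIOLATION: "review procedure practicality and safety culture",
}


def classify(deliberate: bool, executed_as_planned: bool,
             memory_failure: bool = False) -> FailureType:
    """Simplified decision flow for classifying a human failure.

    deliberate: did the person knowingly depart from a rule or procedure?
    executed_as_planned: was the action carried out as the person intended?
    memory_failure: if not, was it because a step was forgotten?
    """
    if deliberate:
        return FailureType.VIOLATION
    if not executed_as_planned:
        # Correct intention, faulty execution.
        return FailureType.LAPSE if memory_failure else FailureType.SLIP
    # Executed as intended, but the plan itself was inadequate.
    return FailureType.MISTAKE


# Operator meant to close valve A but closed the adjacent valve B.
kind = classify(deliberate=False, executed_as_planned=False)
print(kind.value, "->", COUNTERMEASURES[kind])
# prints: slip -> improve HMI design (layout, labelling, feedback)
```

Checking for a violation first mirrors the point above: a deliberate deviation says more about procedures and culture than about the interface.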

Integrating Human Factors into the Functional Safety Lifecycle

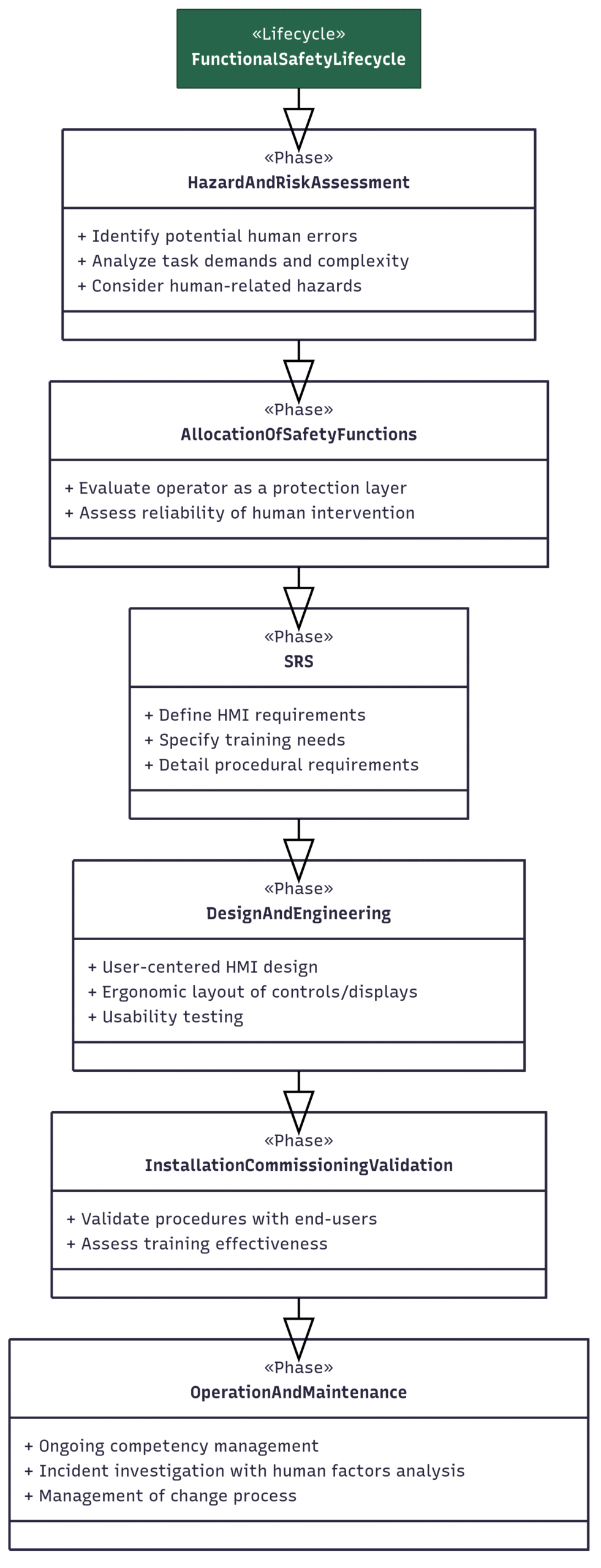

The key to effectively managing human factors is to embed them throughout the entire functional safety lifecycle, from initial conception to decommissioning. It cannot be an afterthought or a “bolt-on” solution. Figure 1 illustrates how human factors activities can be integrated into the phases of the IEC 61508/61511 safety lifecycle.

Figure 1: Integrating Human Factors into the Functional Safety Lifecycle

As this diagram shows, human factors considerations are not a separate stream of activity but are woven into the fabric of each stage of the safety lifecycle. During the initial hazard and risk assessment, for example, a human factors specialist can help identify potential human errors that could lead to a hazardous event. When specifying the safety requirements, the needs of the human operators and maintenance personnel must be explicitly defined.

A Deeper Dive: Methodologies for Human-Centered Safety

Two powerful methodologies for systematically addressing human factors are Task Analysis and Human Reliability Analysis (HRA).

Task Analysis is a systematic process of breaking down a task into its constituent steps to understand the cognitive and physical demands it places on an individual. This analysis helps to identify potential error traps, points of high workload, and opportunities for design improvement. For instance, a task analysis of a startup procedure might reveal a confusing sequence of steps or an HMI that provides ambiguous feedback, both of which could be redesigned to reduce the likelihood of error.
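A task analysis is often recorded as a hierarchy of goals and sub-steps. The sketch below assumes a hypothetical `TaskStep` schema with just two screening attributes (a 1 to 5 workload rating and whether the HMI confirms the step succeeded) and walks the tree to flag candidate error traps in a startup procedure. Real analyses capture many more attributes; the step names and ratings here are invented for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Iterator


@dataclass
class TaskStep:
    """One node in a hierarchical task breakdown (hypothetical schema)."""
    name: str
    workload: int = 1            # subjective rating: 1 (low) to 5 (high)
    clear_feedback: bool = True  # does the HMI confirm the step succeeded?
    substeps: list[TaskStep] = field(default_factory=list)


def find_error_traps(step: TaskStep, path: str = "",
                     max_workload: int = 3) -> Iterator[tuple[str, str]]:
    """Walk the task tree depth-first, yielding (step path, reason) pairs."""
    here = f"{path}/{step.name}" if path else step.name
    if step.workload > max_workload:
        yield here, f"high workload ({step.workload})"
    if not step.clear_feedback:
        yield here, "ambiguous or missing feedback"
    for sub in step.substeps:
        yield from find_error_traps(sub, here, max_workload)


startup = TaskStep("Start up feed pump", substeps=[
    TaskStep("Line up suction valves", workload=2),
    TaskStep("Confirm seal flush", workload=2, clear_feedback=False),
    TaskStep("Ramp to setpoint", workload=4),
])
for where, why in find_error_traps(startup):
    print(where, "->", why)
```

Flagging is only a screening step; whether to redesign the HMI, resequence the procedure, or add job aids remains an engineering judgment.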

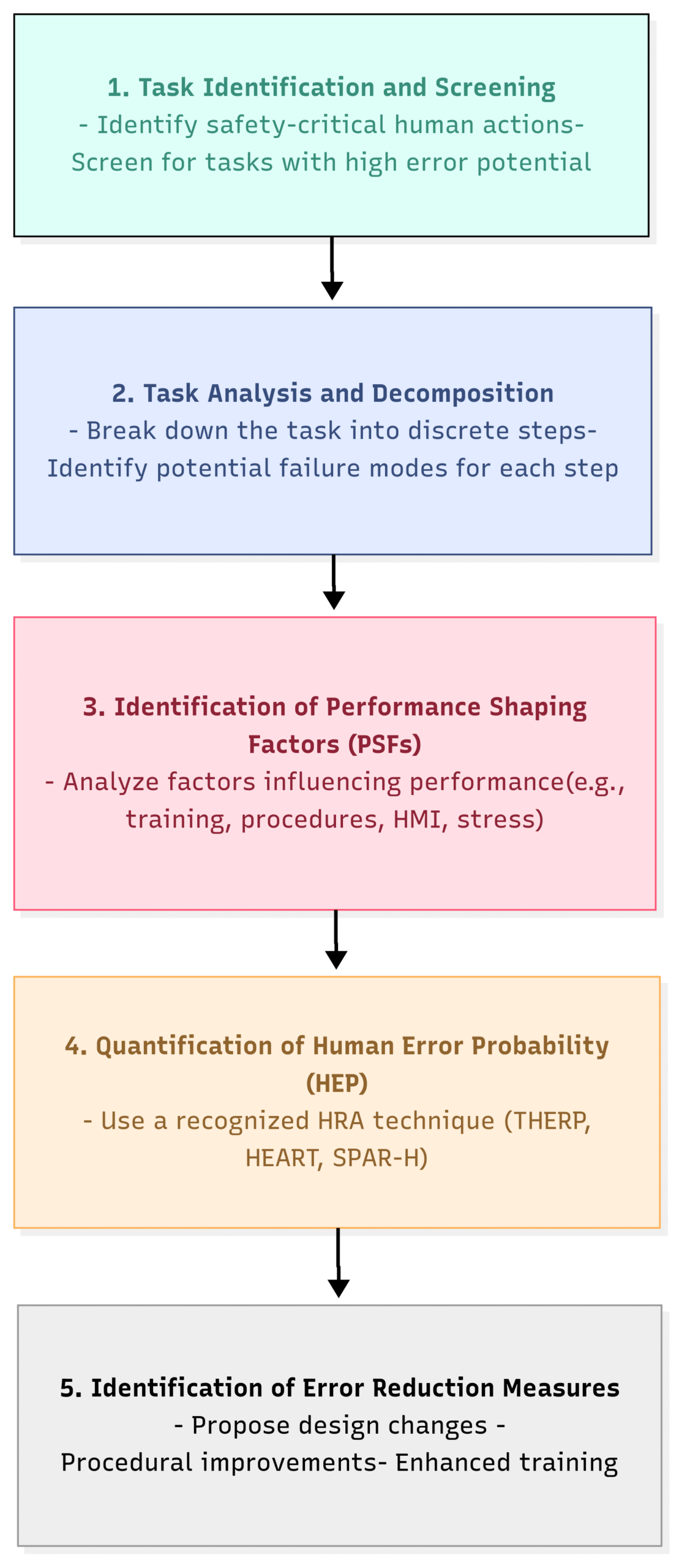

Human Reliability Analysis (HRA) is a structured method, with qualitative and quantitative stages, for estimating the probability of human error (the human error probability, or HEP) in a given task. HRA is particularly valuable for assessing human actions that are credited as independent protection layers in a Layers of Protection Analysis (LOPA). Its results can help determine whether a human response is a sufficiently reliable protection measure or whether an engineered solution is required. Figure 2 outlines a typical HRA process.

Figure 2: The Human Reliability Analysis (HRA) Process

The HRA process provides a structured way to move from a qualitative understanding of potential human errors to a quantitative assessment of their likelihood. This allows for more informed risk-based decision-making.
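To make the quantitative end concrete, the sketch below uses HEART (Human Error Assessment and Reduction Technique) as one assumed choice of quantification method, then feeds the resulting HEP into a simple LOPA calculation. In HEART, each error-producing condition scales the nominal HEP by (max effect − 1) × proportion of affect + 1. The nominal HEP, multipliers, initiating event frequency, tolerable target, and the 0.1 floor on operator credit (`OPERATOR_PFD_FLOOR`) are illustrative assumptions, not values from a real assessment, and the function names are hypothetical. The SIL bands are the IEC 61508 low-demand PFDavg ranges.

```python
import math

# Assumed floor on the PFD credited to an operator response (hypothetical
# parameter; 0.1 is a value commonly used in LOPA for alarm response).
OPERATOR_PFD_FLOOR = 0.1


def heart_hep(nominal: float, epcs: list[tuple[float, float]]) -> float:
    """HEART-style human error probability.

    nominal: nominal HEP for the generic task type.
    epcs: (max_effect, apoa) pairs for each error-producing condition,
          where apoa is the assessed proportion of affect in [0, 1].
    """
    hep = nominal
    for max_effect, apoa in epcs:
        hep *= (max_effect - 1.0) * apoa + 1.0
    return min(hep, 1.0)  # a probability cannot exceed 1


def operator_ipl_pfd(hep: float) -> float:
    """PFD credited to the operator layer: never better than the floor."""
    return max(hep, OPERATOR_PFD_FLOOR)


def sil_needed(ief: float, ipl_pfds: list[float], target: float) -> str:
    """LOPA: size any SIF needed to reach the tolerable frequency (per year)."""
    mitigated = ief * math.prod(ipl_pfds)
    if mitigated <= target:
        return "no SIF needed"
    pfd_needed = target / mitigated
    # IEC 61508 low-demand bands: SIL n covers PFDavg in [10^-(n+1), 10^-n).
    for floor, label in ((1e-1, "below SIL 1"), (1e-2, "SIL 1"),
                         (1e-3, "SIL 2"), (1e-4, "SIL 3"), (1e-5, "SIL 4")):
        if pfd_needed >= floor:
            return label
    return "beyond SIL 4: redesign the process"


# Illustrative inputs: nominal HEP 0.003, time shortage (x11, 20% affect),
# operator inexperience (x3, 50% affect).
hep = heart_hep(0.003, [(11.0, 0.2), (3.0, 0.5)])
print(f"HEP = {hep:.3f}")            # HEP = 0.018
pfd_op = operator_ipl_pfd(hep)       # credited as 0.1, not 0.018
print(sil_needed(ief=0.2, ipl_pfds=[pfd_op], target=1e-4))  # SIL 2
```

The floor captures the idea that an HRA number can look better than an operator can reliably deliver in practice; here, even a favourable HEP leaves a risk gap that calls for an engineered SIF.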

The Real-World Impact: Lessons from Tokaimura

The 1999 Tokaimura criticality accident in Japan is a harrowing example of what can happen when human factors are neglected. Workers at a nuclear fuel processing facility deviated from approved procedures, dissolving uranium enriched to 18.8% U-235 in stainless steel buckets and pouring the solution into a precipitation tank that was not designed to hold that quantity of fissile material. This led to an uncontrolled nuclear fission chain reaction, resulting in two deaths and exposing hundreds of people to radiation.

A human factors analysis of this event would reveal a cascade of failures:

Inadequate Procedures: The approved procedures were cumbersome and not well understood by the workers, leading them to develop their own “more efficient” but dangerously unsafe methods.

Insufficient Training: The workers did not have a deep understanding of the physics of criticality and the severe consequences of their actions.

Poor Design: The design of the workspace did not prevent the use of inappropriate equipment for the task.

Organizational Failures: Management failed to enforce safety procedures and foster a strong safety culture.

The Tokaimura accident tragically underscores that even with the most sophisticated safety systems, a failure to consider the human element can lead to catastrophic consequences.

The Benefits of a Human-Centered Approach

Integrating human factors into functional safety design is not just about preventing accidents; it also yields significant operational benefits:

Reduced Human Error: By designing systems that are intuitive and forgiving, we can significantly reduce the likelihood of slips, lapses, and mistakes.

Improved Efficiency and Productivity: Well-designed interfaces and procedures can streamline workflows, reduce workload, and improve overall operational efficiency.

Enhanced Operator Situation Awareness: A clear and well-organized HMI provides operators with the information they need to make timely and effective decisions, especially during abnormal situations.

Increased System Resilience: By understanding and mitigating potential human failures, we create systems that are more resilient to unexpected events.

Stronger Safety Culture: A focus on human factors demonstrates a commitment to the well-being of employees, which can foster a more positive and proactive safety culture.

The Path Forward: A Call for a Paradigm Shift

The journey towards truly safe systems requires a paradigm shift in how we approach functional safety design. We must move beyond a purely technical focus and embrace a more holistic, human-centric perspective. This means:

Investing in Human Factors Expertise: Organizations should ensure they have access to qualified human factors professionals who can contribute throughout the safety lifecycle.

Making Human Factors a Core Competency: Engineers, safety professionals, and managers should receive training in the fundamentals of human factors.

Prioritizing Usability: The usability of HMIs, procedures, and tools should be a key design consideration, not an afterthought.

Learning from Experience: A robust incident investigation process that includes a thorough human factors analysis is essential for continuous improvement.

The human element is not a liability to be engineered out of our systems, but an integral part of their operation. By understanding human capabilities and limitations and designing our systems accordingly, we can move beyond simply managing risk to creating environments where humans and technology can work together safely and effectively. The unseen architect of safety is, and always will be, the thoughtful consideration of the human who stands at the heart of the system.