AI and Functional Safety: Opportunities and Challenges

The quiet hum of an electric vehicle gliding through city streets, the seamless operation of a complex manufacturing robot, and the unerring accuracy of a surgical assistant: these are just a few glimpses into a world increasingly powered by Artificial Intelligence (AI). As AI continues its march from the digital realm into our physical world, it intersects with functional safety, the engineering discipline concerned with making sure that a system responds correctly to its inputs, including faults and abnormal conditions, so that a malfunction does not lead to harm. Functional safety is the bedrock of systems whose failure could have catastrophic consequences, including injury or loss of life.

The convergence of AI’s probabilistic, data-driven nature with the deterministic, rule-based world of functional safety presents a fascinating and complex dichotomy. On one hand, AI offers unprecedented opportunities to enhance safety, to foresee and prevent accidents with a level of insight that has never been possible. On the other, the very characteristics that make AI so powerful – its ability to learn and adapt – introduce formidable challenges to the established principles of safety certification. This blog post will delve into this critical intersection, exploring the immense potential of AI to revolutionize functional safety, the significant hurdles we must overcome, and the path forward to a future where intelligence and safety are not just compatible, but synergistic.

The Bright Side: Opportunities for AI in Functional Safety

For decades, functional safety has relied on meticulous, human-driven processes of hazard analysis, risk assessment, and the implementation of safety measures. While effective, these methods can be limited by the scope of human imagination and the sheer complexity of modern systems. AI, with its ability to process vast amounts of data and identify subtle patterns, offers a powerful toolkit to augment and enhance these traditional approaches.

Enhanced Hazard Identification and Risk Assessment

Traditional hazard analysis often relies on established checklists, historical data from similar systems, and the expertise of safety engineers. AI can supercharge this process by analyzing a much broader and more diverse set of data streams. Imagine an AI system sifting through terabytes of operational data from an entire fleet of autonomous vehicles, cross-referencing it with near-miss incident reports, weather data, and traffic patterns. This comprehensive analysis can uncover novel hazard scenarios, the “unknown unknowns”, that would be nearly impossible for a human team to predict. Furthermore, AI-powered predictive analytics can not only identify potential hazards but also help estimate their likelihood and severity from observed data rather than from expert judgment alone, enabling more informed risk-based decision-making.
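To make the likelihood-and-severity idea concrete, here is a minimal Python sketch of the final ranking step such an analytics pipeline might perform. It is not itself an AI model: it assumes an upstream system has already labeled near-miss events with a scenario name and a severity class from 1 to 3, and it ranks scenarios by observed events per fleet operating hour multiplied by the worst severity seen. The `NearMiss` type, the scenario labels, and the scoring rule are all hypothetical illustrations.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class NearMiss:
    scenario: str   # hypothetical label assigned upstream, e.g. "occluded_pedestrian"
    severity: int   # hypothetical class: 1 = minor ... 3 = life-threatening


def rank_hazards(events, fleet_hours):
    """Rank scenarios by (events per operating hour) x (worst observed severity)."""
    if fleet_hours <= 0:
        raise ValueError("fleet_hours must be positive")
    counts = defaultdict(int)
    worst = defaultdict(int)
    for event in events:
        counts[event.scenario] += 1
        worst[event.scenario] = max(worst[event.scenario], event.severity)
    scores = {s: counts[s] / fleet_hours * worst[s] for s in counts}
    # Highest estimated risk first.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


events = (
    [NearMiss("occluded_pedestrian", 3)] * 4
    + [NearMiss("lane_drift", 1)] * 10
)
for scenario, score in rank_hazards(events, fleet_hours=1000.0):
    print(f"{scenario}: {score:.4f}")
```

In this made-up data the rarer occluded-pedestrian scenario outranks the more frequent lane drift because of its severity, which is the kind of trade-off a risk-based prioritization is meant to surface.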

Proactive and Predictive Maintenance

Functional safety is not just about preventing failures in the design phase; it’s also about ensuring continued safe operation throughout a system’s lifecycle. Traditional maintenance schedules are often based on fixed time intervals or usage metrics, which can lead to unnecessary downtime or, conversely, a failure to address a component that is degrading faster than expected. AI is set to revolutionize this with predictive maintenance. By continuously monitoring the health of system components through sensor data (e.g., vibration, temperature, acoustic signatures), AI algorithms can detect the subtle signs of impending failure long before they become critical. This allows for maintenance to be scheduled precisely when needed, preventing safety-critical failures, reducing costs, and increasing system availability.
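The core of condition monitoring can be sketched in a few lines. This is a statistical baseline rather than a machine-learning model, and the thresholds, units, and sample values are hypothetical; an AI-based system would replace the fixed z-score rule with a learned model of healthy behavior, but the surrounding alerting logic could look much the same. It learns a baseline from a known-healthy period and raises an alert only when vibration stays abnormally high for several consecutive samples.

```python
import statistics


def fit_baseline(healthy_readings):
    """Mean and sample standard deviation of readings from a known-healthy period."""
    mean, std = statistics.fmean(healthy_readings), statistics.stdev(healthy_readings)
    if std == 0:
        raise ValueError("healthy readings have zero spread; cannot compute z-scores")
    return mean, std


def degradation_alerts(readings, baseline, z_threshold=3.0, consecutive=3):
    """Return indices where vibration has stayed abnormally high for `consecutive` samples.

    Only upward deviations count, on the assumption that rising vibration
    amplitude indicates wear. Requiring several consecutive exceedances
    suppresses alarms from single transient spikes.
    """
    mean, std = baseline
    alerts, run = [], 0
    for i, value in enumerate(readings):
        run = run + 1 if (value - mean) / std > z_threshold else 0
        if run == consecutive:  # alert once at the start of each sustained excursion
            alerts.append(i)
    return alerts


baseline = fit_baseline([1.0, 1.1, 0.9, 1.0, 1.05, 0.95])  # e.g. mm/s RMS
print(degradation_alerts([1.0, 1.3, 1.0, 1.4, 1.5, 1.6, 1.7], baseline))
```

Here the isolated spike at index 1 is ignored, while the sustained rise starting at index 3 produces a single alert at index 5.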

Intelligent Safety Monitoring and Control

AI can act as a vigilant, ever-present co-pilot, monitoring not just the system itself but also its interaction with the environment and human operators. In the cockpit of an advanced aircraft, an AI system could monitor the pilot for signs of fatigue or cognitive overload and suggest a less demanding flight path or even take temporary control to prevent an unsafe maneuver. In a complex industrial plant, an AI-powered vision system could detect if a worker has entered a hazardous area without the proper personal protective equipment and automatically halt the machinery in that zone. This real-time, context-aware monitoring can provide a dynamic layer of safety that adapts to changing conditions.
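For the industrial-plant example, the AI part is the vision model; the decision about what to do with its output can stay simple and deterministic. The sketch below assumes a hypothetical model that reports, for each detected person, the zone they are in and its confidence that the required protective equipment is worn. The `PersonDetection` type, zone names, and the 0.9 threshold are illustrative assumptions, and in practice the stop itself would be executed through a safety-rated control path; this only shows the decision rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PersonDetection:
    zone: str              # zone in which the vision model located a person
    ppe_confidence: float  # model's confidence (0..1) that required PPE is worn


def zones_to_halt(detections, hazardous_zones, min_ppe_confidence=0.9):
    """Return hazardous zones whose machinery should stop.

    Fail-safe bias: PPE counts as present only when the model is confident,
    so an uncertain classification halts the zone rather than letting it run.
    """
    halt = set()
    for d in detections:
        if d.zone in hazardous_zones and d.ppe_confidence < min_ppe_confidence:
            halt.add(d.zone)
    return halt


detections = [
    PersonDetection("press_1", 0.97),
    PersonDetection("press_2", 0.40),
    PersonDetection("walkway", 0.10),
]
print(zones_to_halt(detections, hazardous_zones={"press_1", "press_2"}))
```

Only `press_2` is halted: the worker at `press_1` is confidently wearing PPE, and the walkway is not a hazardous zone.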

Automated Safety Validation and Verification

The validation and verification (V&V) process is a cornerstone of functional safety, ensuring that a system meets its specified safety requirements. This is often a laborious and time-consuming process. AI has the potential to automate significant portions of the V&V workflow. For instance, AI algorithms can be used to generate more comprehensive and targeted test cases, ensuring that the system is tested under a wider range of conditions. AI can also be employed to analyze the vast amounts of data generated during testing, helping to identify anomalies and potential safety vulnerabilities more efficiently.
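One way to generate "targeted" test cases is search-based falsification: sample the scenario space and keep the scenarios that come closest to violating a safety property. The sketch below uses plain random search, the simplest variant (real toolchains typically use smarter optimizers), against a hypothetical toy braking model; the parameter ranges and the `stopping_margin` function are illustrative assumptions, not a validated vehicle model.

```python
import random

G = 9.81  # gravitational acceleration, m/s^2


def stopping_margin(speed, friction, reaction_s, obstacle_m):
    """Toy kinematics: distance left after the reaction delay plus braking (negative = collision)."""
    reaction_distance = speed * reaction_s
    braking_distance = speed ** 2 / (2 * friction * G)
    return obstacle_m - (reaction_distance + braking_distance)


def generate_hard_cases(n_samples=10_000, keep=5, seed=0):
    """Random-search falsification: sample scenarios, keep the ones with the worst margin."""
    rng = random.Random(seed)  # fixed seed so the generated test suite is reproducible
    cases = []
    for _ in range(n_samples):
        scenario = {
            "speed": rng.uniform(5.0, 30.0),       # m/s
            "friction": rng.uniform(0.1, 0.9),     # icy to dry road (rough range)
            "reaction_s": rng.uniform(0.1, 0.5),   # perception + actuation delay
            "obstacle_m": rng.uniform(10.0, 120.0),
        }
        cases.append((stopping_margin(**scenario), scenario))
    cases.sort(key=lambda case: case[0])  # most dangerous (smallest margin) first
    return cases[:keep]


for margin, scenario in generate_hard_cases():
    print(f"{margin:8.1f} m  {scenario}")
```

The retained scenarios become candidate regression tests for the real system; the same loop works with any simulator that returns a scalar safety margin.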

Block Diagram 1: AI-Powered Predictive Safety System

To visualize how these opportunities come together, consider the following conceptual block diagram of an AI-powered predictive safety system:

In this diagram, a variety of data sources continuously feed into an AI/ML model. This model, trained on historical and real-time data, identifies potential safety issues and generates actionable outputs. These outputs are then presented to a central safety management system, where human experts can make informed decisions, thus creating a proactive and data-driven safety ecosystem.

The Hurdles: Challenges of Integrating AI into Functional Safety

Despite the immense opportunities, the path to integrating AI into safety-critical systems is fraught with significant challenges. These challenges stem from the fundamental differences between the data-driven, probabilistic nature of AI and the deterministic, evidence-based principles of traditional functional safety.

The “Black Box” Problem and Explainable AI (XAI)

Perhaps the most significant challenge is the “black box” nature of many advanced AI models, particularly deep neural networks. While these models can achieve remarkable performance, their internal decision-making processes can be incredibly complex and opaque, even to their creators. This lack of transparency is in direct opposition to the core tenets of functional safety, which demand that a system’s behavior be understandable, predictable, and verifiable. If a safety engineer cannot explain why an AI system made a particular decision, it becomes very difficult to build a convincing safety case around that decision.

Explainable AI (XAI) is an emerging field that aims to address this by developing techniques to make AI models more interpretable. XAI methods can, for example, highlight the specific input features that most influenced a model’s output. However, XAI is still in its early stages, and the explanations it provides can sometimes be superficial or even misleading. For safety-critical applications, a much higher standard of explainability is required.
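A simple member of the feature-attribution family is occlusion (perturbation) attribution: replace one input at a time with a baseline value and measure how much the output drops. The sketch below applies it to a hypothetical linear "brake urgency" model; the model, feature names, and zero baseline are assumptions chosen so the result is easy to check by hand.

```python
def occlusion_attribution(model, x, baseline):
    """Attribute a model output to each input feature by replacing that feature
    with a baseline value and measuring how much the output drops."""
    reference = model(x)
    scores = []
    for i in range(len(x)):
        perturbed = list(x)
        perturbed[i] = baseline[i]
        scores.append(reference - model(perturbed))
    return scores


def brake_score(features):
    """Hypothetical model: [obstacle_proximity, speed, cabin_audio_level] -> brake urgency."""
    proximity, speed, audio = features
    return 0.8 * proximity + 0.1 * speed + 0.0 * audio


print(occlusion_attribution(brake_score, [1.0, 0.5, 0.9], baseline=[0.0, 0.0, 0.0]))
```

For a linear model this recovers each weight times its input (about 0.8, 0.05, and 0.0 here). For a deep network, one-at-a-time perturbation misses interactions between features and the result depends on the chosen baseline, which is one concrete way such explanations can end up superficial or misleading.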

Verification and Validation of AI-based Systems

The V&V of traditional software relies on a well-defined set of requirements and test cases. For AI systems, this becomes far more difficult. The input space for many AI applications, such as an autonomous vehicle’s perception system, is effectively infinite. It is impossible to test for every possible combination of lighting, weather, and road conditions. This raises the critical question of how to provide sufficient evidence that an AI system will behave safely in novel situations it has not been explicitly trained on.
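A quick calculation shows why testing alone struggles to supply that evidence. Assuming failures follow a Poisson process with a constant rate and that the test exposure is representative of real operation (both strong assumptions), observing zero failures over an exposure $n$ supports the claim "rate below $\lambda$" at confidence $C$ only when $e^{-\lambda n} \le 1 - C$, that is, $n \ge -\ln(1 - C)/\lambda$. A minimal sketch:

```python
import math


def required_failure_free_exposure(max_failure_rate, confidence):
    """Failure-free exposure needed to claim, at the given confidence, that the true
    failure rate is below `max_failure_rate` (per unit of exposure).

    Assumes a constant-rate Poisson failure process and representative testing.
    With zero observed failures, P(no failures | rate) = exp(-rate * exposure),
    so we need exp(-max_failure_rate * exposure) <= 1 - confidence.
    """
    if not (0.0 < confidence < 1.0) or max_failure_rate <= 0.0:
        raise ValueError("confidence must be in (0, 1) and the rate must be positive")
    return -math.log(1.0 - confidence) / max_failure_rate


hours = required_failure_free_exposure(max_failure_rate=1e-8, confidence=0.95)
print(f"{hours:.3g} failure-free hours")  # roughly 3 / rate
```

For an illustrative target of one failure per $10^8$ hours, roughly $3 \times 10^8$ failure-free hours would be needed at 95% confidence, far beyond what physical testing can realistically deliver.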

Formal verification methods, which use mathematical techniques to prove the correctness of a system, are being explored for neural networks. However, these methods are currently limited to relatively small and simple models and are not yet scalable to the large, complex networks used in many real-world applications.
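To give a flavor of these methods, the sketch below implements interval bound propagation, one of the simplest sound techniques: it pushes a box of possible inputs through each layer using interval arithmetic and returns guaranteed bounds on the output. The tiny two-layer ReLU network and the input box are hypothetical examples chosen so the bounds can be checked by hand.

```python
def affine_bounds(lower, upper, weights, bias):
    """Propagate an input box through y = W x + b using interval arithmetic."""
    out_lower, out_upper = [], []
    for row, b in zip(weights, bias):
        lo = hi = b
        for w, l, u in zip(row, lower, upper):
            # A positive weight maps the lower input bound to the lower output bound;
            # a negative weight swaps them.
            lo += w * l if w >= 0 else w * u
            hi += w * u if w >= 0 else w * l
        out_lower.append(lo)
        out_upper.append(hi)
    return out_lower, out_upper


def relu_bounds(lower, upper):
    """ReLU is monotone, so it can be applied to each bound directly."""
    return [max(0.0, l) for l in lower], [max(0.0, u) for u in upper]


def network_bounds(lower, upper, layers):
    """Bound every output of a ReLU network over the input box [lower, upper]."""
    for i, (weights, bias) in enumerate(layers):
        lower, upper = affine_bounds(lower, upper, weights, bias)
        if i < len(layers) - 1:  # no ReLU after the output layer
            lower, upper = relu_bounds(lower, upper)
    return lower, upper


layers = [
    ([[1.0, -1.0], [1.0, 1.0]], [0.0, 0.0]),  # hidden layer
    ([[1.0, 1.0]], [0.0]),                     # output layer
]
lo, hi = network_bounds([0.0, 0.0], [1.0, 1.0], layers)
print(lo, hi)
```

This proves, for example, that the output never exceeds 3.5 anywhere in the unit box. The true maximum of this network is 2, however, so the bound of 3 is sound but loose; that looseness compounds with depth and width, which is one reason such methods struggle to scale to large networks.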

Compliance with Existing Functional Safety Standards

Functional safety standards like ISO 26262 for road vehicles and IEC 61508, the generic standard that underpins many industrial sector standards, were written with traditional, deterministic hardware and software in mind. These standards rely on concepts like Safety Integrity Levels (SILs) and Automotive Safety Integrity Levels (ASILs), which assume that failures and their consequences can be categorized and their likelihood assessed in a predictable manner. An ASIL, for instance, is derived from the severity, exposure, and controllability of a hazardous event.
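For comparison, here is how an ASIL is assigned to a conventional hazard. ISO 26262-3 classifies each hazardous event by severity (S0 to S3), probability of exposure (E0 to E4), and controllability (C0 to C3), and a lookup table maps the combination to QM or ASIL A to D. For classes S1 to S3, E1 to E4, and C1 to C3, that table can be reproduced with a well-known shortcut: sum the three class numbers, with 10 giving ASIL D, 9 ASIL C, 8 ASIL B, 7 ASIL A, and anything lower QM. The sketch encodes that shortcut as an illustration; check results against the standard's own table. Note that none of its inputs is a model error rate.

```python
ASIL_BY_SUM = {7: "ASIL A", 8: "ASIL B", 9: "ASIL C", 10: "ASIL D"}


def determine_asil(severity, exposure, controllability):
    """Map ISO 26262 S/E/C classes (as integers) to QM or an ASIL via the sum shortcut."""
    if not (0 <= severity <= 3 and 0 <= exposure <= 4 and 0 <= controllability <= 3):
        raise ValueError("expected S0-S3, E0-E4, C0-C3")
    if 0 in (severity, exposure, controllability):
        return "QM"  # any class-0 rating means no ASIL is assigned
    return ASIL_BY_SUM.get(severity + exposure + controllability, "QM")


print(determine_asil(3, 4, 3))  # S3, E4, C3 -> ASIL D
```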

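The probabilistic, data-driven nature of AI does not fit neatly into this framework. For example, how do you assign a SIL or ASIL to a deep learning model that has a certain inherent error rate? How do you account for the possibility of the model making an unsafe decision even when all its hardware and software components are functioning correctly? In the automotive domain, ISO 21448 (Safety of the Intended Functionality, or SOTIF) addresses this class of hazard, which arises from functional insufficiencies rather than from faults, but it does not by itself settle how to certify a learned model. Standards bodies are actively working on adapting these standards for AI, but a clear consensus has yet to emerge.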

Data Dependency and Brittleness

AI models are not programmed in the traditional sense; they are trained on data. This means that the quality, completeness, and representativeness of the training data are paramount. Biased or incomplete data can lead to an AI model that performs well in the lab but behaves in an unsafe and unpredictable manner in the real world. For example, a perception system for an autonomous vehicle that is primarily trained on data from sunny, clear days may fail catastrophically in foggy or snowy conditions.
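A basic data-audit check can catch the most obvious version of the sunny-day problem before training. The sketch assumes each training sample carries a single environmental-condition label and flags every required operating condition that falls below a minimum share of the dataset. The label names and the 5% threshold are hypothetical, and passing this check does not make a dataset representative; it only exposes glaring gaps.

```python
from collections import Counter


def coverage_gaps(sample_conditions, required_conditions, min_share=0.05):
    """Return required operating conditions that are under-represented in the training set.

    `sample_conditions` holds one condition label per training sample.
    """
    if not sample_conditions:
        raise ValueError("no training samples")
    counts = Counter(sample_conditions)
    total = len(sample_conditions)
    return sorted(c for c in required_conditions if counts[c] / total < min_share)


labels = ["clear"] * 900 + ["rain"] * 80 + ["fog"] * 20
print(coverage_gaps(labels, {"clear", "rain", "fog", "snow"}))
```

Fog (2% of samples) and snow (absent entirely) are flagged, while rain at 8% passes the illustrative threshold.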

Furthermore, AI models can exhibit a property known as “brittleness.” This means that small, often imperceptible, changes to the input can lead to large and erroneous changes in the output. This vulnerability can be exploited in “adversarial attacks,” where malicious actors intentionally craft inputs to deceive an AI system into making an unsafe decision.
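The mechanism behind many adversarial attacks can be shown exactly on a linear classifier using the fast gradient sign method (FGSM). For deep networks the gradient comes from automatic differentiation; for a linear score the gradient with respect to the input is just the weight vector, so no libraries are needed. The weights, bias, and input below are hypothetical.

```python
def linear_score(w, b, x):
    """Decision score of a linear classifier: positive means "obstacle"."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_linear(w, x, epsilon):
    """Fast-gradient-sign step against a linear score.

    The gradient of the score with respect to x is simply w, so moving each
    feature by -epsilon * sign(w_i) lowers the score by epsilon * sum(|w_i|),
    the largest drop possible when no feature may change by more than epsilon.
    """
    return [xi - epsilon * sign(wi) for wi, xi in zip(w, x)]


w, b = [2.0, -3.0, 1.5, 2.5], -0.5
x = [0.3, 0.1, 0.2, 0.1]
x_adv = fgsm_linear(w, x, epsilon=0.05)
print(linear_score(w, b, x), linear_score(w, b, x_adv))  # about 0.35 and -0.10
```

Each feature moves by only 0.05, yet the small nudges all push in the same direction and add up to a score change of 0.45, enough to flip "obstacle" to "no obstacle". In high-dimensional inputs such as images, the same accumulation lets imperceptible per-pixel changes produce large output changes.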

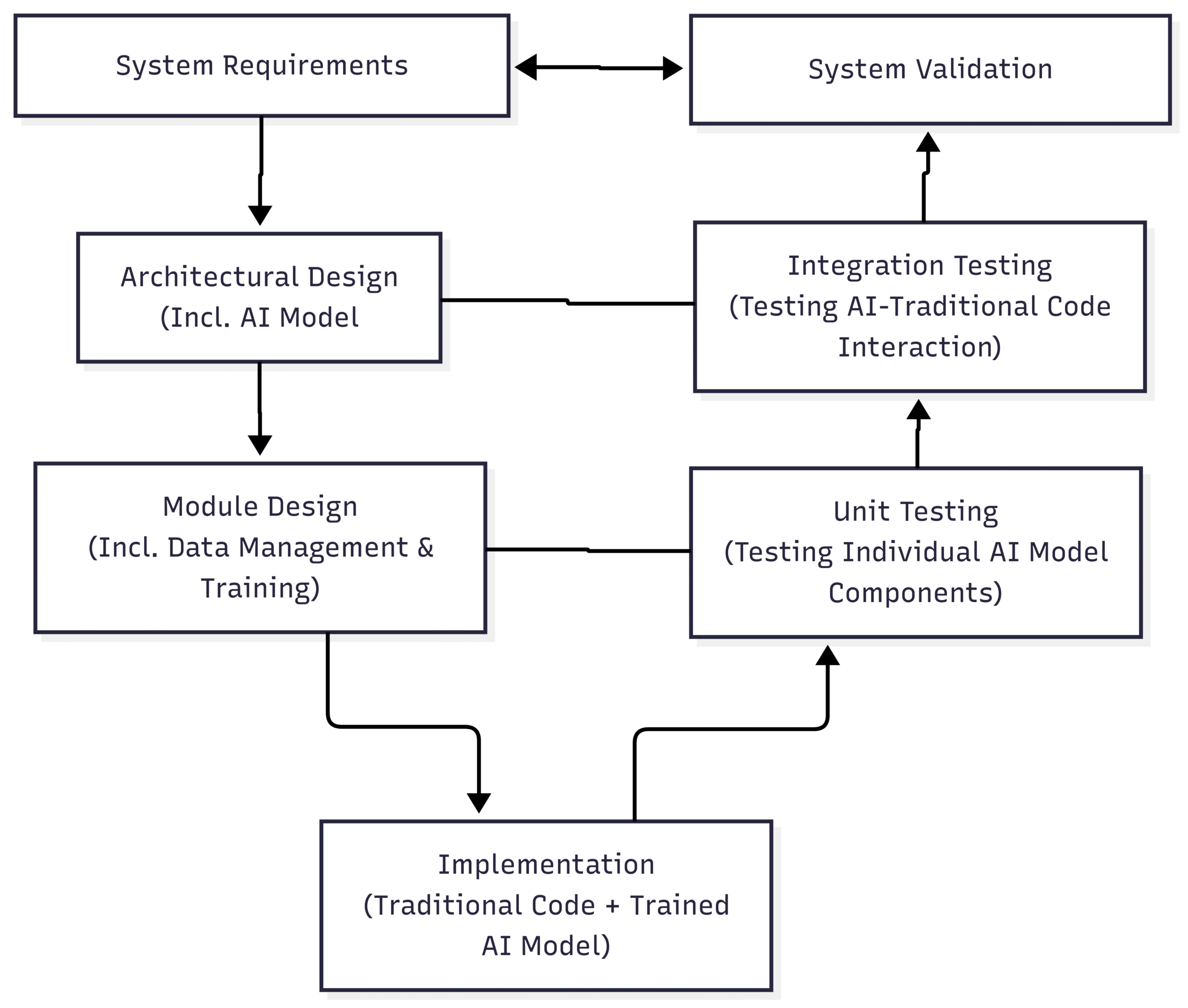

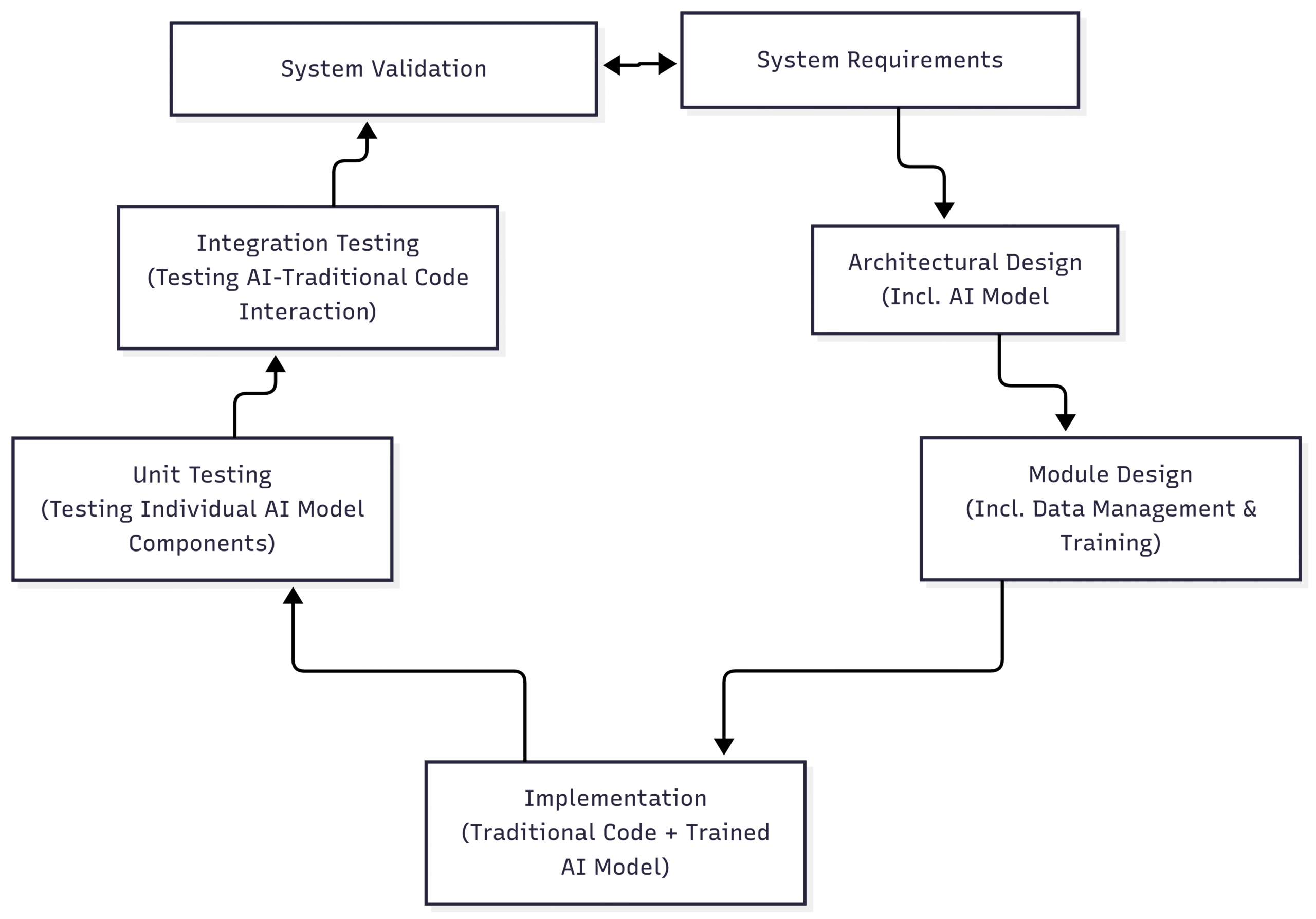

Block Diagram 2: The Challenge of Integrating AI into the Traditional V-Model

The traditional V-model is a widely used framework for developing and testing safety-critical systems. Integrating AI into this model highlights the unique challenges it presents:

On the left side of the V-model, the design and development of an AI-based system introduces new complexities. “Module Design” now includes the critical tasks of data collection, cleaning, and labeling, as well as the selection and training of the AI model. On the right side, the V&V activities face significant hurdles. “Unit Testing” an AI model is not as straightforward as testing a traditional software module. “Integration Testing” must not only verify the interaction between software components but also the interaction between the AI’s probabilistic outputs and the deterministic parts of the system. Finally, “System Validation” must somehow provide confidence in the AI’s performance across an almost infinite range of real-world scenarios.
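Because an AI model rarely has an exact expected output for a given input, one technique proposed for its "unit testing" is metamorphic testing: instead of checking a single answer, check that a transformation which should not matter (here, a uniform brightness shift) does not change the answer. The toy classifier below is a hypothetical stand-in for a perception model operating on a one-dimensional "image" of brightness values.

```python
def toy_obstacle_classifier(pixels):
    """Hypothetical stand-in for a perception model on a 1-D 'image' of brightness values."""
    mean = sum(pixels) / len(pixels)
    return "obstacle" if max(mean - p for p in pixels) > 0.3 else "clear"


def brightness_invariance_failures(classify, image, shifts=(-0.1, 0.1)):
    """Metamorphic test: a uniform brightness shift should not change the label.

    Pixel values are clipped to [0, 1], as a camera sensor would saturate.
    Returns the shifts that changed the classifier's output.
    """
    expected = classify(image)
    failures = []
    for shift in shifts:
        shifted = [min(1.0, max(0.0, p + shift)) for p in image]
        if classify(shifted) != expected:
            failures.append(shift)
    return failures


print(brightness_invariance_failures(toy_obstacle_classifier, [0.45, 0.45, 0.0, 0.45]))
```

The darkening shift of -0.1 flips the label: clipping at zero reduces the contrast that the classifier relies on. Whether that reveals a model weakness or a metamorphic relation that is too strict is an engineering judgment, which illustrates why unit testing an AI component is less clear-cut than testing a conventional module.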

Bridging the Gap: The Path Forward

Overcoming the challenges of integrating AI into functional safety will require a multi-faceted approach that combines technological innovation, evolving standards, and a robust safety culture. A complete reliance on AI for safety-critical decisions is, for the foreseeable future, untenable. Instead, a more pragmatic, hybrid approach is needed.

A Hybrid Approach: AI as a Supervisor, Not the Sole Authority

One promising strategy is to use AI as a powerful advisor or supervisor, while a simpler, more verifiable system retains ultimate control. In this model, the AI system can analyze complex situations and provide recommendations to a human operator or to a traditional, deterministic safety system. This approach leverages the strengths of AI in pattern recognition and data analysis without entrusting it with the final, safety-critical decision.

Another concept gaining traction is the “safety cage” or “safety monitor.” This is an independent, verifiable system that runs in parallel with the AI. The safety cage continuously monitors the inputs and outputs of the AI system and compares them against a set of predefined, hard-coded safety rules. If the AI’s behavior violates these rules or deviates from a safe operational envelope, the safety cage has the authority to intervene, either by overriding the AI’s decision or by transitioning the entire system to a safe state.

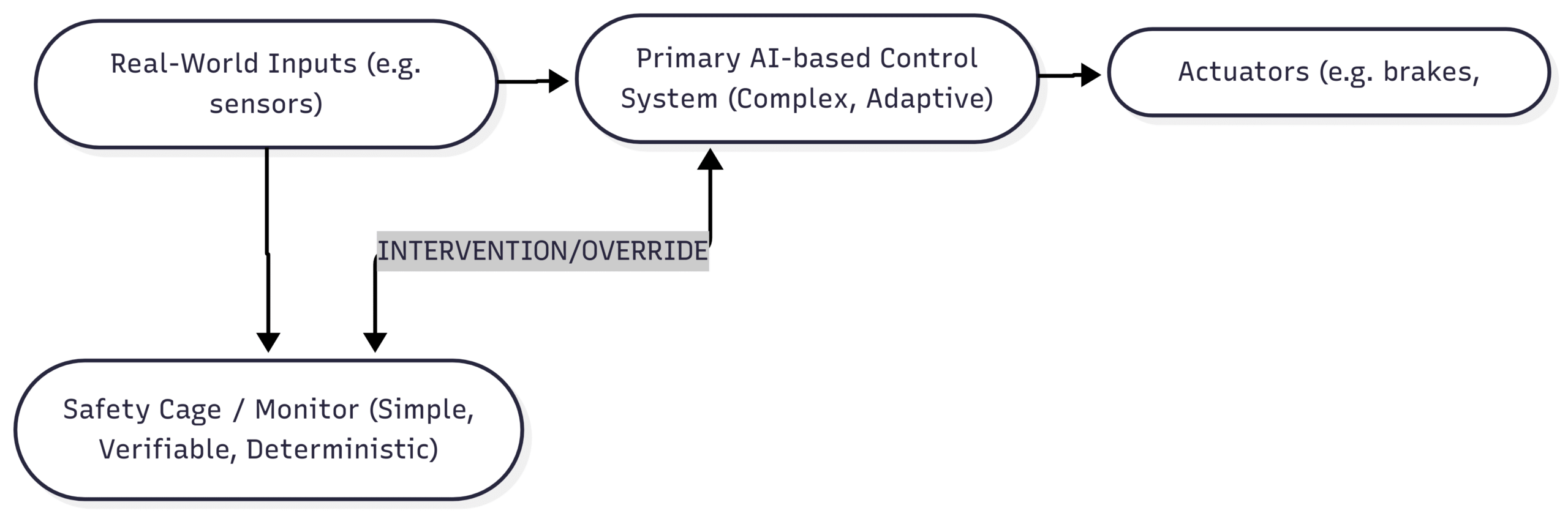

Block Diagram 3: AI with a Safety Cage/Monitor

This block diagram illustrates the concept of a safety cage:

In this architecture, the primary control system is a complex and adaptive AI model. However, its outputs are not sent directly to the actuators. Instead, a simpler, verifiable safety cage monitors both the AI’s inputs and its proposed actions. If the safety cage detects a potential violation of predefined safety constraints (e.g., an autonomous vehicle attempting to accelerate towards a red light), it can intervene and prevent the unsafe action from occurring. This provides a critical layer of deterministic safety around the probabilistic AI.
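The red-light example can be sketched as a small, deterministic function that sits between the AI planner and the actuators. Everything here is a hypothetical illustration: the `Perception` and `Command` types, the braking limit, and the safe-state deceleration are assumptions, and a real safety cage would also need inputs that are independent of the AI it supervises.

```python
from dataclasses import dataclass

MAX_BRAKE = 6.0  # m/s^2, hypothetical verified braking capability


@dataclass(frozen=True)
class Command:
    acceleration: float  # m/s^2; positive speeds up, negative brakes


@dataclass(frozen=True)
class Perception:
    light_is_red: bool
    distance_to_stop_line: float  # m
    speed: float                  # m/s
    sensors_healthy: bool


SAFE_STATE = Command(acceleration=-3.0)  # hypothetical controlled stop


def safety_cage(p: Perception, proposed: Command) -> tuple[Command, str]:
    """Check the AI's proposed command against hard-coded rules before actuation."""
    if not p.sensors_healthy:
        # Inputs cannot be trusted, so neither can the AI's decision.
        return SAFE_STATE, "sensor fault: safe state"
    if p.light_is_red and proposed.acceleration > 0:
        # Override with the deceleration needed to stop at the line (v^2 / 2d),
        # capped at the braking limit. The 0.1 m floor avoids division by zero.
        needed = p.speed ** 2 / (2 * max(p.distance_to_stop_line, 0.1))
        return Command(-min(needed, MAX_BRAKE)), "red light: acceleration overridden"
    return proposed, "pass-through"


print(safety_cage(Perception(True, 50.0, 10.0, True), Command(1.5)))
```

At 10 m/s and 50 m from the line, the proposed acceleration is replaced by a 1.0 m/s² deceleration. If the needed deceleration exceeded `MAX_BRAKE`, the line could not be reached in time; this sketch only caps the value, whereas a real design would need an explicit strategy for that case.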

The Evolution of Standards and Regulation

The international standards bodies behind functional safety standards are actively working to address the challenges posed by AI. Emerging documents such as ISO/IEC TR 5469 (functional safety and AI systems) and ISO/PAS 8800 (safety and AI in road vehicles) provide initial guidance on managing the safety lifecycle of AI-based systems. The likely result is a new paradigm for certification that moves beyond the traditional, deterministic framework and incorporates concepts like statistical confidence, runtime monitoring, and operational design domains (ODDs), which define the specific conditions under which an AI system is designed to operate safely.
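Runtime monitoring and ODDs fit together naturally: the system continuously checks whether current conditions are still inside the domain it was designed and validated for, and triggers a fallback, such as a handover or a minimal-risk maneuver, when they are not. The sketch below assumes a hypothetical ODD described by a speed limit, a minimum visibility, and a set of allowed weather conditions; real ODD specifications are considerably richer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperationalDesignDomain:
    max_speed: float              # m/s
    min_visibility: float         # m
    allowed_weather: frozenset    # e.g. frozenset({"clear", "rain"})


@dataclass(frozen=True)
class Conditions:
    speed: float
    visibility: float
    weather: str


def odd_violations(odd, now):
    """List every ODD boundary the current conditions fall outside of (empty = inside)."""
    violations = []
    if now.speed > odd.max_speed:
        violations.append("speed")
    if now.visibility < odd.min_visibility:
        violations.append("visibility")
    if now.weather not in odd.allowed_weather:
        violations.append("weather")
    return violations


odd = OperationalDesignDomain(25.0, 150.0, frozenset({"clear", "rain"}))
print(odd_violations(odd, Conditions(speed=20.0, visibility=80.0, weather="fog")))
```

Returning every violated boundary, rather than a single yes/no, lets the fallback logic and the logs record why the system left its ODD.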

The Importance of a Robust Safety Culture

Ultimately, technology and standards alone are not enough. Organizations that develop and deploy AI in safety-critical applications must foster a deep-seated safety culture. This means ensuring that everyone involved, from data scientists and software engineers to project managers and executives, understands the unique risks and benefits of AI. It requires a commitment to transparency, a willingness to invest in rigorous V&V processes, and a recognition that when it comes to safety, there are no shortcuts.

Conclusion

The marriage of AI and functional safety is a complex one, filled with both exhilarating promise and sobering challenges. AI has the potential to usher in a new era of proactive, predictive, and intelligent safety systems that can prevent accidents and save lives in ways we are only beginning to imagine. However, the path to realizing this potential is not a simple one. We must proceed with a healthy dose of caution, a commitment to rigorous engineering, and a clear-eyed understanding of the limitations of current AI technology.

The “black box” must be opened, the complexities of verification and validation must be untangled, and our standards and regulations must evolve. By embracing hybrid approaches like the safety cage, fostering a strong safety culture, and encouraging collaboration between the worlds of AI and functional safety, we can navigate these challenges. The future of safety will not be a choice between human intuition and artificial intelligence, but rather a powerful synergy between the two, working in concert to create a safer world for us all.